MultiMediate Grand Challenge 2024


MultiMediate’24: Multi-Domain Engagement Estimation

Proceedings of the 32nd ACM International Conference on Multimedia, pp. 11377–11382, 2024.

Multi-Domain Engagement Estimation

Estimating the momentary level of participants' engagement is an important prerequisite for assistive systems that support human interactions. Previous work has addressed this task in within-domain evaluation scenarios, i.e. training and testing on the same dataset. This contrasts with real-life scenarios, where domain shifts between training and testing data frequently occur. With MultiMediate'24, we present the first challenge addressing multi-domain engagement estimation. As training data, we utilise the NOXI database of dyadic novice-expert interactions. In addition to within-domain test data, we add two new test domains. First, we introduce recordings that follow the NOXI protocol but cover languages not present in the NOXI training data. Second, we collected novel engagement annotations on the MPIIGroupInteraction dataset, which consists of group discussions among three to four people. In this way, MultiMediate'24 evaluates the ability of approaches to generalise across factors such as language and cultural background, group size, task, and screen-mediated vs. face-to-face interaction.

Organisers

Cognitive Assistants

DFKI GmbH

Germany

Human-Computer Interaction and Cognitive Systems

University of Stuttgart

Germany

Human Centered Artificial Intelligence

Augsburg University

Germany

MultiMediate Grand Challenge 2023


MultiMediate’23: Engagement Estimation and Bodily Behaviour Recognition in Social Interactions

Proceedings of the 31st ACM International Conference on Multimedia, pp. 9640–9645, 2023.

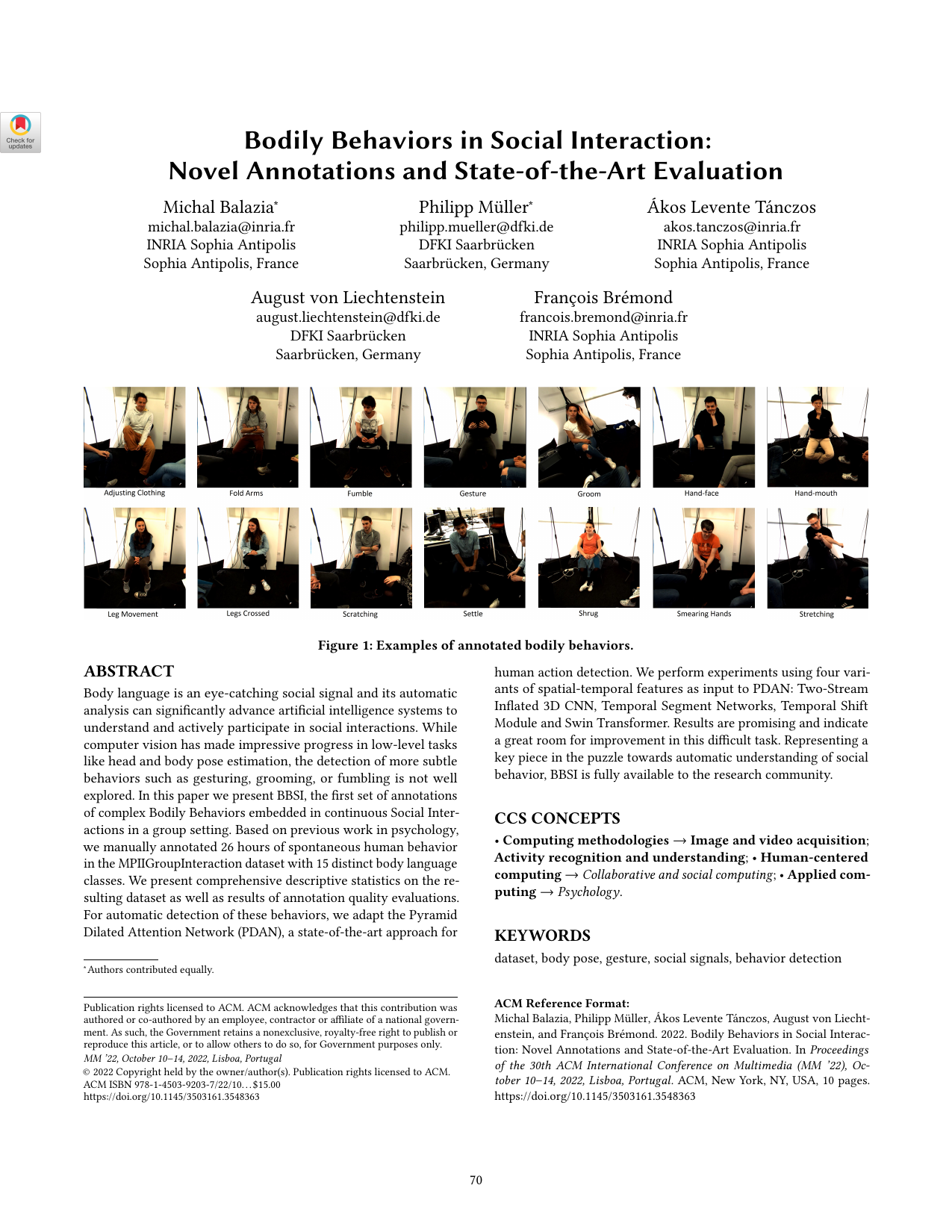

Bodily Behaviour Recognition

Bodily behaviours like fumbling, gesturing or crossed arms are key signals in social interactions and are related to many higher-level attributes, including liking, attractiveness, social verticality, stress and anxiety. While impressive progress has been made on human body and hand pose estimation, the recognition of such more complex bodily behaviours is still underexplored. With the bodily behaviour recognition task, we present the first challenge addressing this problem. We formulate bodily behaviour recognition as a 14-class multi-label classification problem. This task is based on the recently released BBSI dataset (Balazia et al., 2022). Challenge participants will receive 64-frame video snippets as input and need to output a score indicating the likelihood of each behaviour class being present. To counter class imbalance, performance will be evaluated using macro-averaged average precision.
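To make the metric concrete, the sketch below computes average precision (AP) per behaviour class and then takes the unweighted mean over classes. It is a pure-Python illustration, not the official evaluation script: it assumes the same AP definition scikit-learn uses (AP = sum over distinct score thresholds of (R_n - R_{n-1}) * P_n), and the function names `average_precision` and `macro_average_precision` are hypothetical. In this sketch a class with no positive samples raises an error instead of being scored.

```python
from typing import Sequence


def average_precision(y_true: Sequence[int], y_score: Sequence[float]) -> float:
    """AP for one class: sum over distinct thresholds of (R_n - R_{n-1}) * P_n."""
    n_pos = sum(y_true)
    if n_pos == 0:
        # Assumption of this sketch: AP is treated as undefined without positives.
        raise ValueError("AP is undefined for a class without positive samples")
    # Rank samples from most to least confident.
    ranked = sorted(zip(y_score, y_true), key=lambda pair: pair[0], reverse=True)
    tp = fp = 0
    prev_recall = 0.0
    ap = 0.0
    i = 0
    while i < len(ranked):
        threshold = ranked[i][0]
        # All samples tied at this score cross the threshold together.
        while i < len(ranked) and ranked[i][0] == threshold:
            if ranked[i][1]:
                tp += 1
            else:
                fp += 1
            i += 1
        recall = tp / n_pos
        precision = tp / (tp + fp)
        ap += (recall - prev_recall) * precision
        prev_recall = recall
    return ap


def macro_average_precision(
    y_true: Sequence[Sequence[int]], y_score: Sequence[Sequence[float]]
) -> float:
    """Unweighted mean of per-class AP; rows are snippets, columns are classes."""
    n_classes = len(y_true[0])
    per_class = [
        average_precision([row[c] for row in y_true], [row[c] for row in y_score])
        for c in range(n_classes)
    ]
    return sum(per_class) / n_classes
```

For the BBSI task, each row would hold the 14 per-class labels or scores of one 64-frame snippet. Because every class contributes equally to the mean, a rare behaviour weighs as much as a frequent one, which is why macro averaging counters class imbalance.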

Engagement Estimation

Knowing how engaged participants are is important for a mediator whose goal is to keep engagement at a high level. Engagement is closely linked to the previous MultiMediate tasks of eye contact detection and backchannel detection. For the purpose of this challenge, we collected novel annotations of engagement on the Novice-Expert Interaction (NoXi) database (Cafaro et al., 2017). This database consists of dyadic, screen-mediated interactions focussed on information exchange. Interactions took place in several languages, and participants were recorded with video cameras and microphones. The task is the frame-wise prediction of each participant's level of conversational engagement on a continuous scale from 0 (lowest) to 1 (highest). Challenge participants are encouraged to investigate multimodal behaviour as well as the reciprocal behaviour of both interlocutors. We will use the Concordance Correlation Coefficient (CCC) to evaluate predictions.
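The CCC rewards predictions that both correlate with the annotations and match their mean and scale: CCC = 2·cov(x, y) / (var(x) + var(y) + (mean(x) - mean(y))²). The sketch below implements this formula with population statistics on two frame-wise sequences. It is an illustration under assumptions: the name `ccc` is hypothetical, and the page does not say whether the official evaluation computes CCC per session and averages or over all frames concatenated.

```python
from statistics import fmean
from typing import Sequence


def ccc(pred: Sequence[float], gold: Sequence[float]) -> float:
    """Concordance Correlation Coefficient between two equal-length sequences."""
    if len(pred) != len(gold) or len(pred) == 0:
        raise ValueError("sequences must be non-empty and of equal length")
    mean_p, mean_g = fmean(pred), fmean(gold)
    # Population (biased) variances and covariance.
    var_p = fmean((p - mean_p) ** 2 for p in pred)
    var_g = fmean((g - mean_g) ** 2 for g in gold)
    cov = fmean((p - mean_p) * (g - mean_g) for p, g in zip(pred, gold))
    denom = var_p + var_g + (mean_p - mean_g) ** 2
    if denom == 0:
        # Both sequences are constant and identical: CCC is undefined.
        raise ValueError("CCC is undefined for two identical constant sequences")
    return 2 * cov / denom
```

Unlike Pearson correlation, a prediction that is perfectly correlated but shifted (for example, always 0.5 higher than the annotation) scores below 1, because the squared mean difference enters the denominator.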

Organisers

Cognitive Assistants

DFKI GmbH

Germany

Human-Computer Interaction and Cognitive Systems

University of Stuttgart

Germany

Human Centered Artificial Intelligence

Augsburg University

Germany

MultiMediate Grand Challenge 2022


MultiMediate’22: Backchannel Detection and Agreement Estimation in Group Interactions

arXiv:2209.09578, pp. 1–6, 2022.


The NoXi Database: Multimodal Recordings of Mediated Novice-Expert Interactions

Proceedings of the 19th ACM International Conference on Multimodal Interaction, pp. 350–359, 2017.


Bodily Behaviors in Social Interaction: Novel Annotations and State-of-the-Art Evaluation

Proceedings of the 30th ACM International Conference on Multimedia, pp. 70–79, 2022.

Backchannel Detection

Backchannels serve important meta-conversational purposes like signifying attention or indicating agreement. They can be expressed in a variety of ways, ranging from vocal behaviour (“yes”, “ah-ha”) to subtle nonverbal cues like head nods or hand movements. The backchannel detection sub-challenge focuses on classifying whether a participant in a group interaction expresses a backchannel at a given point in time. Challenge participants will be required to perform this classification based on a 10-second context window of audiovisual recordings of the whole group.

Agreement Estimation

A key function of backchannels is the expression of agreement or disagreement towards the current speaker. It is crucial for artificial mediators to have access to this information in order to understand the group structure and to intervene to prevent potential escalations. In this sub-challenge, participants will address the task of automatically estimating the amount of agreement expressed in a backchannel. As in the backchannel detection sub-challenge, a 10-second audiovisual context window containing views of all interactants will be provided.

Organisers

Cognitive Assistants

DFKI GmbH

Germany

Human-Computer Interaction and Cognitive Systems

University of Stuttgart

Germany

MultiMediate Grand Challenge 2021


MultiMediate: Multi-modal Group Behaviour Analysis for Artificial Mediation

Proc. ACM Multimedia (MM), pp. 4878–4882, 2021.

Eye Contact Detection Sub-challenge

This sub-challenge focuses on eye contact detection in group interactions from ambient RGB cameras. We define eye contact as a discrete indication of whether a participant is looking at another participant’s face and, if so, who this other participant is. Video and audio recordings over a 10-second context window will be provided as input to give temporal context for the classification decision. Eye contact has to be detected for the last frame of this context window, which makes the task formulation also applicable to an online prediction scenario as encountered by artificial mediators.

Next Speaker Prediction Sub-challenge

In the next speaker prediction sub-challenge, approaches need to predict which members of the group will be speaking at a future point in time. As in the eye contact detection sub-challenge, video and audio recordings over a 10-second context window will be provided as input. Based on this information, approaches need to predict the speaking status of each participant one second after the end of the context window.
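The two sub-challenges share the same 10-second input window but predict at different times: eye contact at the window's last frame, speaking status one second later. The sketch below converts these definitions into frame indices. It assumes a constant, known frame rate (`fps` is a parameter because the page does not state the recordings' frame rate), and the names `SampleTiming` and `sample_timing` are hypothetical, not part of any challenge toolkit.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SampleTiming:
    window_start: int        # first frame of the context window (inclusive)
    window_end: int          # last frame of the context window (inclusive)
    eye_contact_frame: int   # frame whose eye-contact label is predicted
    next_speaker_frame: int  # frame whose speaking status is predicted


def sample_timing(
    window_end: int, fps: float, context_s: float = 10.0, horizon_s: float = 1.0
) -> SampleTiming:
    """Frame indices for one sample, assuming a constant frame rate `fps`."""
    context_frames = round(context_s * fps)
    horizon_frames = round(horizon_s * fps)
    window_start = window_end - context_frames + 1
    if window_start < 0:
        raise ValueError("not enough history for a full context window")
    return SampleTiming(
        window_start=window_start,
        window_end=window_end,
        eye_contact_frame=window_end,                    # last frame of the window
        next_speaker_frame=window_end + horizon_frames,  # one second later
    )
```

For example, at an assumed 30 fps, `sample_timing(299, fps=30)` spans frames 0 to 299, labels eye contact at frame 299, and labels the next speaker at frame 329. Only frames up to `window_end` may be used as input, which keeps the formulation compatible with online prediction.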

Evaluation of Participants’ Approaches

For the purpose of this challenge, we model the next speaker prediction problem as a multi-label problem. Hence, a model for this task should predict a binary value (speaking = 1, not speaking = 0) for each participant in a given sample. As a metric to compare the submitted models, we will use the unweighted average recall over all samples (see scikit-learn's recall_score(y_true, y_pred, average='macro') function).
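With multi-label input, `recall_score(..., average='macro')` computes recall separately for each label column (here, each participant) over all samples and then averages these recalls without weighting. The pure-Python sketch below mirrors that computation for illustration. The name `unweighted_average_recall` is hypothetical. A participant who never speaks in the ground truth gets a recall of 0.0, which to our understanding matches scikit-learn's default `zero_division` behaviour (it also emits a warning).

```python
from typing import Sequence


def unweighted_average_recall(
    y_true: Sequence[Sequence[int]], y_pred: Sequence[Sequence[int]]
) -> float:
    """Macro recall over participant columns; rows are samples, 1 = speaking."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("inputs must be non-empty and of equal length")
    n_participants = len(y_true[0])
    recalls = []
    for p in range(n_participants):
        positives = sum(1 for row in y_true if row[p] == 1)
        hits = sum(1 for t, q in zip(y_true, y_pred) if t[p] == 1 and q[p] == 1)
        # No positives for this participant: recall set to 0.0 (see lead-in).
        recalls.append(hits / positives if positives else 0.0)
    return sum(recalls) / n_participants
```

Averaging per participant means that a model which always predicts "not speaking" scores 0 rather than benefiting from the fact that most participants are silent most of the time.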

For the eye contact detection task, the problem is modeled as a multi-class problem. Given a specific participant, a submitted model should predict which other participant they are making eye contact with. The task is modeled using five classes: one for each participant's position (classes 1-4) and an additional class for no eye contact (class 0). To evaluate performance on this task, we will use accuracy as a metric (see scikit-learn's accuracy_score(y_true, y_pred) function).
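The five-class encoding and the accuracy metric can be illustrated with a short sketch. It assumes labels are plain integers (0 for no eye contact, 1-4 for the position of the looked-at participant) and computes the fraction of exactly matching labels, which is what `accuracy_score` returns for single-label multi-class input. The constants and the function name `eye_contact_accuracy` are hypothetical; the range check is an addition of this sketch.

```python
from typing import Sequence

NO_EYE_CONTACT = 0  # class 0: the participant looks at nobody's face
NUM_CLASSES = 5     # class 0 plus participant positions 1-4


def eye_contact_accuracy(y_true: Sequence[int], y_pred: Sequence[int]) -> float:
    """Fraction of samples whose predicted class equals the ground-truth class."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("inputs must be non-empty and of equal length")
    for label in (*y_true, *y_pred):
        if not 0 <= label < NUM_CLASSES:
            raise ValueError(f"label {label} outside classes 0-{NUM_CLASSES - 1}")
    correct = sum(1 for t, p in zip(y_true, y_pred) if t == p)
    return correct / len(y_true)
```

For example, `eye_contact_accuracy([0, 1, 2, 3, 4], [0, 1, 2, NO_EYE_CONTACT, 4])` evaluates to 0.8: one of five samples is wrong because the model missed eye contact with the participant at position 3.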

Participants will receive training and validation data that they can use to build solutions for each sub-challenge (eye contact detection and next speaker prediction). These approaches will then be evaluated remotely on our side using the unpublished test portion of the dataset. To this end, participants will create and upload Docker images with their solutions, which are then evaluated on our systems (for more information regarding the process, visit this link).